Difference between revisions of "Data services"

From Lsdf

(18 intermediate revisions by 4 users not shown)

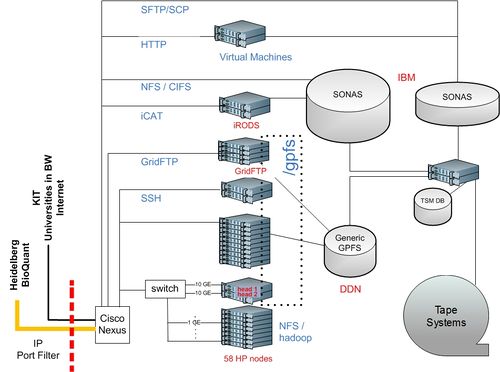

Content removed in the latest revision:

The services currently provided by LSDF are:

- Storage, comprising two systems with 1.4 PB and 500 TB
- Cloud computing: OpenNebula cloud environment
- Hadoop data intensive computing framework

The "Hadoop cluster" consists of **58 nodes** with **464 physical cores** in total, each node having:

- 2 sockets with an Intel Xeon E5520 CPU @ 2.27 GHz, 4 cores each, hyperthreading active (16 logical cores in total)
- 36 GB of RAM
- 2 TB of disk
- 1 GbE network connection
- OS: Scientific Linux 5.5
  - Linux kernel 2.6.18

plus **2 head nodes**, each having:

- 2 sockets with an Intel Xeon E5520 CPU @ 2.27 GHz, 4 cores each, hyperthreading active (16 logical cores in total)
- 96 GB of RAM
- 10 GbE network connection
- OS: Scientific Linux 5.5
  - Linux kernel 2.6.18

All nodes are, however, shared between Hadoop tasks and OpenNebula virtual machines.
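The per-node figures of the former Hadoop cluster multiply out to the stated total of 464 physical cores. The sketch below reproduces that arithmetic for the 58 worker nodes only (head nodes are left out because their disk size is not listed). It assumes hyperthreading exposes 2 threads per core, which matches the "16 logical cores" per node above. The `NodeSpec` and `cluster_totals` names are hypothetical and exist only for illustration. Because the nodes were shared with OpenNebula virtual machines, treat these totals as nominal upper bounds rather than capacity actually available to Hadoop jobs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical description of one node's hardware."""
    sockets: int
    cores_per_socket: int
    threads_per_core: int  # assumed 2 when hyperthreading is active
    ram_gb: int
    disk_tb: int

    @property
    def physical_cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def logical_cores(self) -> int:
        return self.physical_cores * self.threads_per_core


def cluster_totals(count: int, node: NodeSpec) -> dict:
    """Multiply per-node resources by the number of identical nodes."""
    return {
        "physical_cores": count * node.physical_cores,
        "logical_cores": count * node.logical_cores,
        "ram_gb": count * node.ram_gb,
        "disk_tb": count * node.disk_tb,
    }


# Worker node as listed above: 2 x 4-core E5520, HT on, 36 GB RAM, 2 TB disk.
worker = NodeSpec(sockets=2, cores_per_socket=4, threads_per_core=2,
                  ram_gb=36, disk_tb=2)
totals = cluster_totals(58, worker)
print(totals)
# {'physical_cores': 464, 'logical_cores': 928, 'ram_gb': 2088, 'disk_tb': 116}
```

The physical-core total agrees with the 464 cores stated on the page; the other totals are derived from the per-node figures rather than quoted from the source.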

Latest revision as of 10:27, 29 July 2016

- Fast reliable storage accessible via standard protocols

- Archival services for long term access to data

- Cloud storage: S3 / REST

- Hadoop data intensive computing framework

- GridFTP Data Transfers

- HTTP/SFTP data sharing service

- GPU nodes (NVIDIA)

Access and use of services and resources

- Request Access to Resources

- After access is granted, continue to registration

- About LSDF Usage

- Acceptable Services Usage