UNICORE and S3

Last edited by Diana.gudu
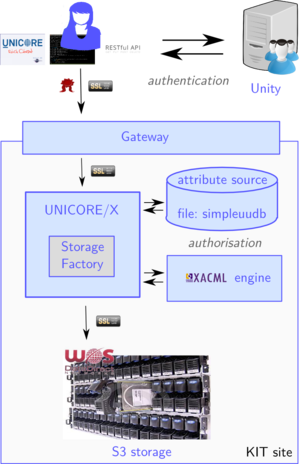

Latest revision as of 10:41, 15 May 2015

Intro

This page describes how to use UNICORE to access S3 storage. The setup integrating the WOS S3 storage with the Human Brain Project's UNICORE infrastructure is depicted in the figure above. The installation and configuration process is described at http://wiki.scc.kit.edu/lsdf/index.php/UNICORE_and_S3_configuration.

The UNICORE installation comprises the UNICORE Gateway and the UNICORE/X component, which offers the StorageFactory service. Authentication is done via Unity or OIDC, and the installation only accepts users authenticated via the HBP Unified Portal. The services should also be available via the UNICORE REST API. S3 keys are mapped to HBP users via UNICORE's file attribute sources.

UNICORE Commandline Client

Installation and configuration

To run the UNICORE Commandline Client, you need a Java runtime, version 1.6.0_25 or later; Java 7 is highly recommended.

On Ubuntu, install OpenJDK 7:

$ sudo apt-get install openjdk-7-jdk

Download the latest version of the UNICORE Commandline Client (ucc) from http://sourceforge.net/projects/unicore/files/Clients/Commandline%20Client/7.1.0. To install the deb package:

$ sudo dpkg -i unicore-ucc_7.1.0-1_all.deb

ucc can be configured by editing the ~/.ucc/preferences file. A minimal configuration for accessing the UNICORE server at KIT should include:

# truststore configuration: location of trusted certificates

truststore.type=directory

truststore.directoryLocations.1=/path/to/truststore/*.pem

# authentication via the Unity server in Juelich

# use an OIDC token OR a Unity username and password

authenticationMethod=unity

unity.address=https://hbp-unic.fz-juelich.de:7112/UNITY/unicore-soapidp-oidc/saml2unicoreidp-soap/AuthenticationService

# unity.username=<your_unity_username>

# unity.password=<your_unity_password>

oauth2.bearerToken=<your_oidc_token>

registry=https://unicore.data.kit.edu:8080/DEFAULT-SITE/services/Registry?res=default_registry
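The preferences file is a plain list of key=value settings. As a rough pre-flight check before running ucc, the hypothetical helpers below (Python 3; not part of ucc) parse such lines, ignoring '#' comments. They then report whether the settings listed above are present, including either a bearer token or a Unity username and password. This is a sketch that handles only the simple key=value form used here, not every feature of Java properties files.

```python
REQUIRED = ("truststore.type", "truststore.directoryLocations.1", "registry")


def parse_preferences(lines):
    """Parse key=value lines, skipping blank lines and '#' comments."""
    prefs = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:
            prefs[key.strip()] = value.strip()
    return prefs


def check_preferences(prefs):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = ["missing " + key for key in REQUIRED if key not in prefs]
    has_token = "oauth2.bearerToken" in prefs
    has_login = "unity.username" in prefs and "unity.password" in prefs
    if not (has_token or has_login):
        problems.append("set oauth2.bearerToken or unity.username and unity.password")
    return problems
```

For example, open os.path.expanduser("~/.ucc/preferences") and pass the file object to parse_preferences, then print the result of check_preferences.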

Storage management

The UNICORE Commandline Client offers a simple interface to manage your storages. We will focus here on S3 storage, but most of the examples apply to any type of storage.

First, you need to create a new storage. To list the possible types of storages that you can create using UNICORE's StorageFactory service, type:

$ ucc system-info -l

This will print something like:

Checking for StorageFactory service ...

... OK, found 1 service(s)

* https://unicore.data.kit.edu:8080/DEFAULT-SITE/services/StorageFactory?res=default_storage_factory

S3

User-settable parameters:

- accessKey: access key

- secretKey: secret key

- provider: JClouds provider to use

- endpoint: S3 endpoint to access

This means that the service only allows the creation of S3-type storages. When creating a new storage, the user can also set several parameters, such as the S3 access keys, the endpoint and the JClouds provider. However, this is not necessary for the UNICORE setup at KIT. That setup uses the https://s3.data.kit.edu endpoint by default and maps a user's distinguished name (DN) to their S3 keys on the server side, using UNICORE's file attribute sources.

The following commands can be used to create a new S3 storage:

$ ucc create-storage # uses all the default parameters: storage type, endpoint, keys

$ ucc create-storage -t S3 # set the storage type to S3 and use other parameters' default values

$ ucc create-storage -t S3 accessKey=... secretKey=... # set the S3 keys on the command line

$ ucc create-storage -t S3 @s3.properties # use the settings from file s3.properties (accessKey, secretKey, endpoint)
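The last variant reads the storage parameters from a file. Below is a minimal sketch (Python 3) of producing such a file. It assumes the file uses the same key=value form as the command-line parameters, with the key names given in the comment above (accessKey, secretKey, endpoint). The helper names are hypothetical, and the default endpoint is the KIT endpoint mentioned above. Because the file holds a secret key, the writer creates new files readable by their owner only.

```python
import os
import stat

KIT_ENDPOINT = "https://s3.data.kit.edu"  # default endpoint of the KIT setup


def format_s3_properties(access_key, secret_key, endpoint=KIT_ENDPOINT):
    """Return the text of an s3.properties file (one key=value per line)."""
    lines = ["accessKey=" + access_key, "secretKey=" + secret_key]
    if endpoint:
        lines.append("endpoint=" + endpoint)
    return "\n".join(lines) + "\n"


def write_s3_properties(path, access_key, secret_key, endpoint=KIT_ENDPOINT):
    """Write the file; a newly created file gets owner-only permissions (0600)."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC,
                 stat.S_IRUSR | stat.S_IWUSR)
    with os.fdopen(fd, "w") as fh:
        fh.write(format_s3_properties(access_key, secret_key, endpoint))
```

After writing the file, run ucc create-storage -t S3 @s3.properties as shown above.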

The command will print the URL of the created S3 storage:

https://unicore.data.kit.edu:8080/DEFAULT-SITE/services/StorageManagement?res=bf8189af-2e06-4fae-8e00-63925fe56612
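The res query parameter of this URL identifies the new storage resource. The sketch below (Python 3, hypothetical helper names) extracts it with the standard urllib.parse module and derives a URL under the REST API described further down this page. The second step is an assumption that this page does not confirm: it supposes the REST API exposes the same storage as .../rest/core/storages/<id>, with the same identifier.

```python
from urllib.parse import parse_qs, urlsplit


def storage_id(service_url):
    """Return the value of the 'res' query parameter of a UNICORE service URL."""
    return parse_qs(urlsplit(service_url).query)["res"][0]


def rest_storage_url(service_url):
    """Map .../services/StorageManagement?res=ID to .../rest/core/storages/ID.

    Assumption: the REST API uses the same ID for the same storage.
    """
    parts = urlsplit(service_url)
    site_root = parts.path.split("/services/")[0]  # e.g. "/DEFAULT-SITE"
    return "{}://{}{}/rest/core/storages/{}".format(
        parts.scheme, parts.netloc, site_root, storage_id(service_url))
```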

You can get more information about this resource with the command:

$ ucc wsrf getproperties 'https://unicore.data.kit.edu:8080/DEFAULT-SITE/services/StorageManagement?res=bf8189af-2e06-4fae-8e00-63925fe56612'

To list all the storages of the current user:

$ ucc list-storages -l

Destroying a storage, or any other UNICORE resource, can be done with:

$ ucc wsrf destroy 'https://unicore.data.kit.edu:8080/DEFAULT-SITE/services/StorageManagement?res=bf8189af-2e06-4fae-8e00-63925fe56612'

For the newly created storage, several operations are available:

- listing the contents of the storage

$ ucc ls -l -H 'https://unicore.data.kit.edu:8080/DEFAULT-SITE/services/StorageManagement?res=bf8189af-2e06-4fae-8e00-63925fe56612#/'

This will print something like:

-rw- 0 2015-02-13 10:43 /bucket2

-rw- 0 2015-02-13 10:43 /unicore

-rw- 0 2015-02-13 10:43 /testbucket

It is also possible to refer to the resource using a simpler URI scheme. If the storage resource is registered in the registry with the name S3, you can use the following URI:

'u6://S3'

However, this form of addressing is slower and generates some network traffic, because ucc first has to look up the storage in the registry.

- listing the contents of a specific bucket:

$ ucc ls -l -H 'u6://S3/bucket2'

This will print all the objects in that bucket (paths are relative to the storage root):

-rw- 620.6K 2014-11-25 14:38 /bucket2/object2

- copying files to and from the remote storage or between remote storages:

$ ucc cp <source> <destination>

$ ucc cp localfile u6://S3

$ ucc cp 'u6://S3/bucket2/*' .

$ ucc cp u6://S3/bucket2/object u6://S3/unicore
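When copies are scripted, passing the arguments to ucc as a list bypasses the local shell entirely. A remote wildcard such as u6://S3/bucket2/* therefore reaches ucc unexpanded. The sketch below (Python 3) wraps the copy command this way. It also splits a u6:// address into the registered storage name and the path relative to the storage root. The helper names are hypothetical; the only ucc syntax relied on is the ucc cp <source> <destination> form shown above.

```python
import subprocess


def split_u6(uri):
    """Split 'u6://S3/bucket2/object' into ('S3', '/bucket2/object')."""
    prefix = "u6://"
    if not uri.startswith(prefix):
        raise ValueError("not a u6:// address: " + uri)
    name, _, path = uri[len(prefix):].partition("/")
    return name, "/" + path


def ucc_cp(source, destination, run=subprocess.run):
    """Run 'ucc cp SOURCE DESTINATION' without a shell; raise if ucc fails."""
    return run(["ucc", "cp", source, destination], check=True)
```

For example, calling ucc_cp(name, "u6://S3/unicore") for each name in a list uploads several local files in turn.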

Other ucc commands include stat for getting information on a file, mkdir to create a new bucket, rm to delete a remote file and find to search for files with names matching a certain pattern. All available commands are described in the ucc user manual: https://www.unicore.eu/documentation/manuals/unicore6/files/ucc/ucc-manual.html

UNICORE REST API

Description

The base URL of the REST API for a UNICORE/X container is:

https://<gateway_url>/SITENAME/rest/core

We will abbreviate this URL as BASE in the text below. For the UNICORE instance at KIT, BASE is:

https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core

The REST API provides access to several resources handled by the UNICORE/X instance: the target system services available to the user (sites), the available storages and the server-to-server file transfers. Users can also manage their jobs and submit new jobs to target systems.

To list the available resources, send a GET request for the base URL with curl, authenticating with your username and password (-k disables certificate verification):

$ curl -k -u user:pass -H "Accept: application/json" BASE

which will output:

{

"resources": [

"BASE/storages",

"BASE/jobs",

"BASE/sites",

"BASE/transfers"

]

}
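The same call can be made with Python's standard library. The sketch below (Python 3) builds the request with either HTTP Basic credentials, as curl -u does, or an OAuth2 bearer token. The bearer variant is an assumption. This page configures ucc with an OIDC token (oauth2.bearerToken), and we assume the REST API accepts the same token in a standard Authorization: Bearer header. The function names are hypothetical. resource_map indexes the returned list by the last path segment of each URL.

```python
import base64
import json
import ssl
import urllib.request

BASE = "https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core"


def build_request(url, token=None, user=None, password=None):
    """GET request for JSON, authenticated with a bearer token or Basic credentials."""
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    if token:
        req.add_header("Authorization", "Bearer " + token)
    elif user is not None:
        pair = "{}:{}".format(user, password).encode("utf-8")
        req.add_header("Authorization", "Basic " + base64.b64encode(pair).decode("ascii"))
    return req


def resource_map(payload):
    """Index the listed resources by their last path segment, e.g. 'storages'."""
    return {url.rstrip("/").rsplit("/", 1)[-1]: url for url in payload["resources"]}


def list_resources(token):
    """Fetch BASE and return its resources; needs network access and a valid token."""
    # Like curl -k: certificate checks are disabled. Prefer a proper CA bundle.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(build_request(BASE, token=token), context=ctx) as resp:
        return resource_map(json.load(resp))
```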

This page covers only storage operations; for the other resources, see the API reference and examples in the official UNICORE documentation.

Basic operations on storage using Python

requests is a simple HTTP library for Python that provides most of the functionality you need. However, it does not implement OAuth authentication flows itself.

rauth is built on top of requests and supports OAuth 1.0, OAuth 2.0 and OFly authentication. There are many other libraries implementing OAuth, such as requests_oauthlib, but the examples below use rauth.

To install rauth:

$ pip install rauth

First, we set up a basic OAuth2 session and check that we can access the server. For authentication with an OIDC token, we assume the token is saved in the file 'oidcToken'. rauth can also obtain the access token from the OAuth service if the client has an ID and a secret.

#!/usr/bin/env python

import json

import time

from rauth import OAuth2Session

base = "https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core"

print "Accessing REST API at ", base

clientId = "portal-client" # the client id for HBP Portal users

# read the OIDC token, dropping the trailing newline

with open("oidcToken", "r") as tokenFile:

    btoken = tokenFile.read().strip()

rauth_session = OAuth2Session(client_id=clientId, access_token=btoken)

headers = {'Accept': 'application/json'}

r = rauth_session.get(base, headers=headers, verify=False)

if r.status_code != 200:

    print "Error accessing the server!"

else:

    print "Ready."

    print json.dumps(r.json(), indent=4)

Listing the existing storages takes a simple GET request to the URL BASE/storages:

headers = {'Accept': 'application/json'}

r = rauth_session.get(base+"/storages", headers=headers, verify=False)

storagesList = r.json()

print json.dumps(r.json(), indent=4)

The code outputs something like:

{

"storages": [

"https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core/storages/f13b3ffa-413a-4ab7-a620-e8e04291a5f5",

"https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core/storages/52126d09-8ea6-4b06-84f8-205839bb5bdc"

]

}
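Selecting a storage by its position in this list, as the next step does, depends on the order in which the server returns the storages. A sketch of an order-independent alternative (Python 3, hypothetical helper name) follows. Each storage URL ends with the storage's unique ID, as the self link and the uniqueID field in the properties shown below illustrate, so the list can be indexed by that ID.

```python
def storages_by_id(storages_payload):
    """Map each storage's unique ID (the last URL segment) to its REST URL."""
    return {url.rstrip("/").rsplit("/", 1)[-1]: url
            for url in storages_payload["storages"]}
```

For example, storages_by_id(storagesList)["52126d09-8ea6-4b06-84f8-205839bb5bdc"] returns the second URL above.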

Getting information on the second storage listed:

headers = {'Accept': 'application/json'}

s3storageUrl = storagesList['storages'][1]

r = rauth_session.get(s3storageUrl, headers=headers, verify=False)

s3storageProps = r.json()

print json.dumps(r.json(), indent=4)

This will print:

{

"resourceStatus": "READY",

"currentTime": "2015-02-12T17:41:52+0100",

"terminationTime": "2015-02-20T14:39:03+0100",

"umask": "77",

"_links": {

"files": {

"href": "https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core/storages/52126d09-8ea6-4b06-84f8-205839bb5bdc/files",

"description": "Files"

},

"self": {

"href": "https://unicore.data.kit.edu:8080/DEFAULT-SITE/rest/core/storages/52126d09-8ea6-4b06-84f8-205839bb5bdc"

}

},

"uniqueID": "52126d09-8ea6-4b06-84f8-205839bb5bdc",

"owner": "CN=Diana Gudu 123456,O=HBP",

"protocols": [

"BFT"

]

}
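Before using the storage, a script can check the fields shown above. The sketch below (Python 3) uses two hypothetical helpers. files_link refuses a storage whose resourceStatus is not READY and returns the files link used in the next step. remaining_lifetime computes the time left until terminationTime, measured against the server's currentTime. We assume that terminationTime is the time after which the server may destroy the storage resource unless its lifetime is extended.

```python
from datetime import datetime

# Timestamp format of the properties above, e.g. "2015-02-20T14:39:03+0100"
TIME_FORMAT = "%Y-%m-%dT%H:%M:%S%z"


def files_link(storage_props):
    """Return the 'files' link of a storage, refusing storages that are not READY."""
    status = storage_props.get("resourceStatus")
    if status != "READY":
        raise RuntimeError("storage is not ready: {}".format(status))
    return storage_props["_links"]["files"]["href"]


def remaining_lifetime(storage_props):
    """timedelta from the server's currentTime to the storage's terminationTime."""
    now = datetime.strptime(storage_props["currentTime"], TIME_FORMAT)
    end = datetime.strptime(storage_props["terminationTime"], TIME_FORMAT)
    return end - now
```

With the properties above, remaining_lifetime returns 7 days, 20:57:11.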

Listing the files (in the case of S3 storage, the buckets) in the previously selected storage:

headers = {'Accept': 'application/json'}

bucketBase = s3storageProps['_links']['files']['href']

r = rauth_session.get(bucketBase, headers=headers, verify=False)

bucketList = r.json()

print json.dumps(r.json(), indent=4)

The code will output:

{

"isDirectory": true,

"children": [

"/bucket2",

"/unicore",

"/testbucket"

],

"size": 0

}

Listing the objects in the first bucket:

headers = {'Accept': 'application/json'}

bucket = bucketList['children'][0]

bucketUrl = bucketBase + bucket

r = rauth_session.get(bucketUrl, headers=headers, verify=False)

objectList = r.json()

print json.dumps(r.json(), indent=4)

This will print a list similar to the bucket list. Note that the listed paths are relative to the storage root, not to the parent directory:

{

"isDirectory": true,

"children": [

"/bucket2/object2"

],

"size": 0

}
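Because the listed paths are relative to the storage root, the URL of an entry is the storage's files link (bucketBase above) followed by the listed path. Appending the path to the bucket URL instead would repeat the bucket name. A small sketch (Python 3, hypothetical helper names) that makes this explicit:

```python
def file_url(files_href, path):
    """REST URL of a file, given the storage's 'files' link and a root-relative path."""
    return files_href.rstrip("/") + "/" + path.lstrip("/")


def child_urls(files_href, listing):
    """REST URLs of every entry in a directory listing returned by the API."""
    return [file_url(files_href, child) for child in listing.get("children", [])]
```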

Downloading the first file in the first bucket and then saving it locally:

headers = {'Accept': 'application/octet-stream'}

object2down = objectList['children'][0]

object2downUrl = bucketBase + object2down

r = rauth_session.get(object2downUrl, headers=headers, verify=False)

objectName = object2down.split('/')[-1]

fileOut = open(objectName, "wb")

fileOut.write(r.content)

fileOut.close()
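r.content holds the whole object in memory. For large objects, streaming the body to disk in chunks may be preferable. The sketch below (Python 3, hypothetical function name) assumes the session is a requests-based session such as rauth_session, whose get method passes requests options like stream=True through. Unlike the code above, it also checks the HTTP status.

```python
def download(session, url, target, chunk_size=64 * 1024):
    """Stream a remote file to the local path 'target' in chunks.

    'session' is assumed to be a requests-based session such as rauth_session.
    """
    headers = {"Accept": "application/octet-stream"}
    # verify=False mirrors the examples on this page (no certificate check).
    r = session.get(url, headers=headers, verify=False, stream=True)
    r.raise_for_status()  # stop on HTTP errors instead of saving an error page
    with open(target, "wb") as out:
        for chunk in r.iter_content(chunk_size=chunk_size):
            out.write(chunk)
    return target
```

For example, download(rauth_session, object2downUrl, objectName) replaces the get, write and close steps above.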

To get only the file information instead of the data, request the JSON media type instead of octet-stream:

headers = {'Accept': 'application/json'}

r = rauth_session.get(object2downUrl, headers=headers, verify=False)

print json.dumps(r.json(), indent=4)

Uploading a file to the first bucket. In the code below, we upload the file 'test' from the current directory:

headers = {'Content-Type': 'application/octet-stream'}

object2up = "test"

object2upUrl = bucketUrl + "/" + object2up

bytes2up = open(object2up, "rb")

r = rauth_session.put(object2upUrl, data=bytes2up, headers=headers, verify=False)

bytes2up.close()
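A slightly more defensive version of the upload follows (Python 3 sketch, hypothetical function name). The with statement closes the local file even if the request raises, and raise_for_status reports HTTP errors instead of letting them pass silently.

```python
def upload(session, local_path, url):
    """PUT a local file's bytes to 'url' and raise on HTTP errors."""
    headers = {"Content-Type": "application/octet-stream"}
    with open(local_path, "rb") as data:  # closed even if the request fails
        r = session.put(url, data=data, headers=headers, verify=False)
    r.raise_for_status()
    return r.status_code
```

For example, upload(rauth_session, "test", bucketUrl + "/test") performs the same upload as above.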

To delete the uploaded object:

headers = {'Accept': 'application/json'}

r = rauth_session.delete(object2upUrl, headers=headers, verify=False)
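To clean up several objects at once, for example every object URL of a bucket, a loop over DELETE requests can collect failures instead of stopping at the first one. The sketch below (Python 3, hypothetical function name) relies on the ok and status_code attributes of requests responses.

```python
def delete_all(session, urls):
    """DELETE each URL; return {url: status_code} for the requests that failed."""
    failed = {}
    for url in urls:
        r = session.delete(url, headers={"Accept": "application/json"}, verify=False)
        if not r.ok:  # requests: ok is True for status codes below 400
            failed[url] = r.status_code
    return failed
```

For example, delete_all(rauth_session, [object2upUrl]) returns an empty dictionary if the deletion succeeded.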

The whole Python script can be found here.